A new player named Etched has emerged with a chip that's turning heads in the world of AI hardware. The Sohu chip isn't a GPU at all – it's a bold bet that the future of AI belongs to transformer models. But in a landscape dominated by NVIDIA's all-purpose GPUs, why should we care about this newcomer?

Let's dive in and unpack what makes Sohu interesting, and why it might matter more than you think.

The AI Chip Conundrum

First, let's address the elephant in the room: NVIDIA's GPUs are really, really good. They're the Swiss Army knives of the AI world, capable of handling everything from image processing to natural language tasks.

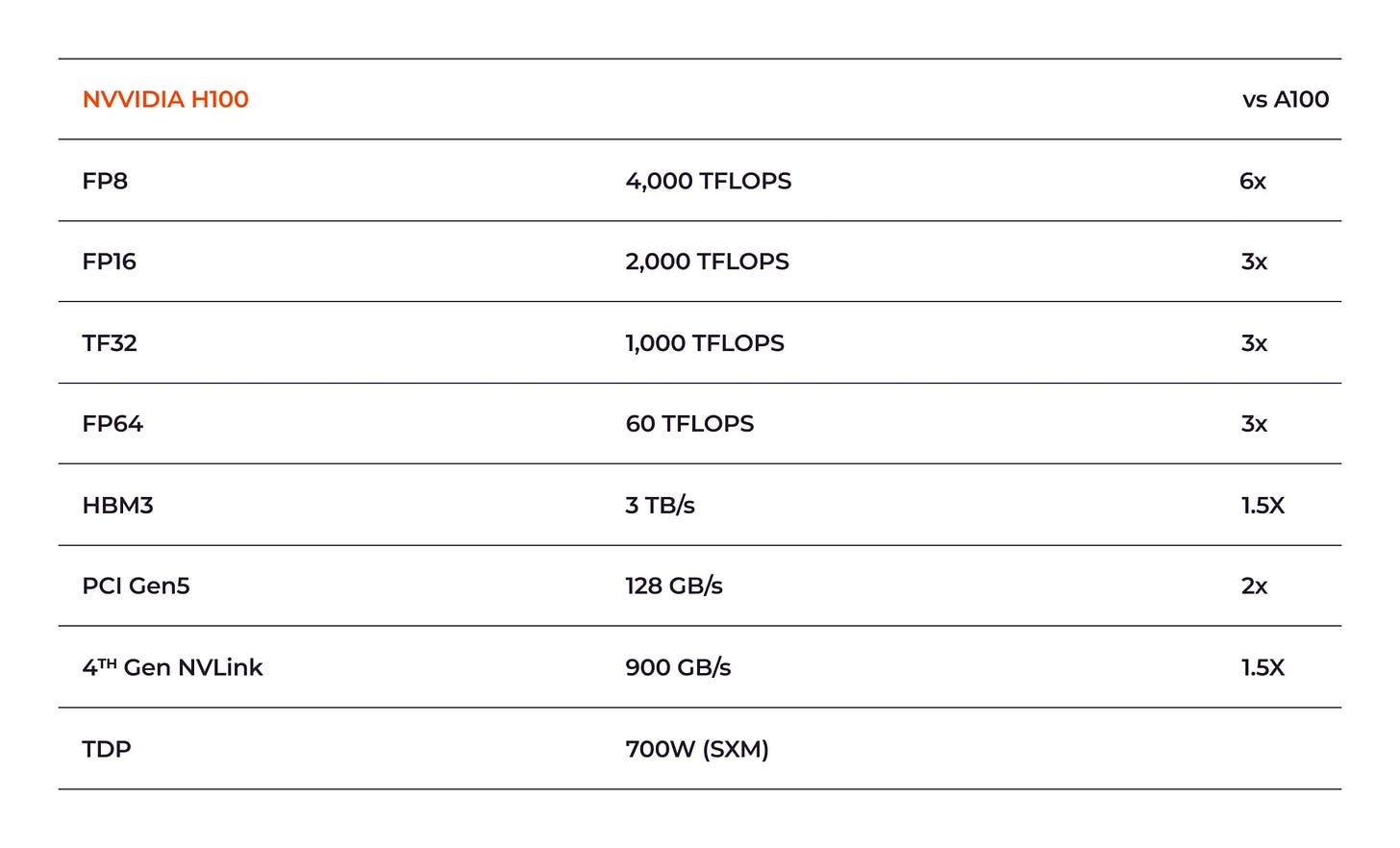

The A100, for instance, has been a workhorse for AI researchers and companies alike, powering everything from LLMs at OpenAI to diffusion models at Stability AI. Its successor, the H100, is more capable still.

So why would anyone want to build a chip that does less?

Here's where Etched's thesis gets interesting. They're betting that the jack-of-all-trades approach is reaching its limits. Instead of trying to be good at everything, Sohu is laser-focused on being great at one thing: running transformer models.

It's a gutsy move, reminiscent of when Apple decided to ditch Intel and make its own chips. But is it smart?

The Transformer Takeover

To understand Etched's bet, we need to talk about transformers. These aren't the shape-shifting robots from your childhood – they're the AI models powering everything from ChatGPT to Google's latest search results. Over the past few years, transformers have gone from being just another AI architecture to the dominant force in the field.

Take Google's BERT, for example. BERT, which stands for Bidirectional Encoder Representations from Transformers, was introduced in 2018 and revolutionized natural language processing.

In simple terms, BERT is like a super-smart reader that can understand context in both directions – forward and backward – in a sentence. This ability allows it to grasp nuances in language that previous models missed, leading to significant improvements in tasks like question answering, sentiment analysis, and even Google's search results.
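To make "both directions" concrete, here's a toy sketch in Python. It isn't BERT's actual code. It only models the attention mask, the rule for which words each position is allowed to look at: a left-to-right model sees only earlier words, while a bidirectional encoder like BERT sees the whole sentence. The function names are made up for illustration.

```python
def causal_mask(n: int) -> list[list[bool]]:
    """Left-to-right mask: position i may attend only to positions 0..i."""
    return [[j <= i for j in range(n)] for i in range(n)]


def bidirectional_mask(n: int) -> list[list[bool]]:
    """BERT-style mask: every position may attend to every other position."""
    return [[True] * n for _ in range(n)]


def visible_context(mask: list[list[bool]], tokens: list[str], i: int) -> list[str]:
    """Return the tokens that position i is allowed to 'see' under a mask."""
    return [tok for tok, allowed in zip(tokens, mask[i]) if allowed]


tokens = ["the", "bank", "of", "the", "river"]
print(visible_context(causal_mask(5), tokens, 1))         # ['the', 'bank']
print(visible_context(bidirectional_mask(5), tokens, 1))  # ['the', 'bank', 'of', 'the', 'river']
```

Only the bidirectional mask lets "bank" see "river", which is exactly the kind of context that tells a model this is a riverbank rather than a financial institution.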

The Technological Marvel

Etched is essentially saying, "If transformers are the future of AI, why are we still using chips designed for general-purpose computing?"

It's a fair question, and Sohu is their answer.

According to Etched, a single 8xSohu server can process over 500,000 tokens per second on the Llama 70B model. That's roughly 20 times more than an H100 server (23,000 tokens/sec) and roughly 10 times more than a server built on NVIDIA's next-generation Blackwell B200 (~45,000 tokens/sec).
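The "20 times" and "10 times" figures are rounded. Here's a quick check in Python using only the throughput numbers quoted above. The dictionary keys are just labels, and the numbers are Etched's published claims (the Sohu figure is for an 8-chip server), not independent measurements.

```python
# Claimed Llama 70B throughput in tokens/sec, as quoted in this article.
# The B200 figure is approximate.
THROUGHPUT = {
    "8xSohu server": 500_000,
    "H100 server": 23_000,
    "B200 server": 45_000,
}


def speedup(baseline: str, candidate: str = "8xSohu server") -> float:
    """Ratio of the candidate's throughput to the baseline's throughput."""
    return THROUGHPUT[candidate] / THROUGHPUT[baseline]


for gpu in ("H100 server", "B200 server"):
    print(f"Sohu vs {gpu}: {speedup(gpu):.1f}x")
# Sohu vs H100 server: 21.7x
# Sohu vs B200 server: 11.1x
```

In other words, "20 times" and "10 times" slightly understate the claimed ratios.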

These aren't just arbitrary benchmarks, either. They're based on running in FP8 without sparsity at 8x model parallelism with 2048 input/128 output lengths – the same methodology used by industry giants like NVIDIA and AMD.
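To make that methodology a little more concrete, here's a hypothetical config record with one derived quantity: how many bytes of model weights each chip holds when a roughly 70-billion-parameter model is stored in FP8 (one byte per weight) and split evenly across 8 chips. It's a back-of-the-envelope sketch, not Etched's tooling, and it ignores the KV cache, activations, and any uneven sharding.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BenchmarkConfig:
    """Benchmark settings described above; field names are illustrative."""
    params: float = 70e9        # Llama 70B parameter count (approximate)
    bytes_per_param: int = 1    # FP8 stores each weight in 1 byte
    sparsity: bool = False      # dense weights, no sparsity tricks
    model_parallel: int = 8     # model split across 8 chips
    input_len: int = 2048       # prompt tokens per request
    output_len: int = 128       # generated tokens per request

    def weight_bytes_per_chip(self) -> float:
        """Weight memory per chip, ignoring KV cache and activations."""
        return self.params * self.bytes_per_param / self.model_parallel


cfg = BenchmarkConfig()
print(f"{cfg.weight_bytes_per_chip() / 1e9:.2f} GB of weights per chip")
# 8.75 GB of weights per chip
```

Halving the parallelism to 4 chips would double that share, which is one reason the parallelism setting has to be fixed for a fair comparison.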

So how does Sohu achieve this remarkable performance?

It's all about specialization. Sohu is an Application-Specific Integrated Circuit (ASIC), custom-designed for transformer models. By focusing solely on transformers, Etched can eliminate unnecessary components and optimize every aspect of the chip for its specific task.

Add to this the cutting-edge 4nm process technology from TSMC, and you've got a chip that's pushing the boundaries of what's possible in AI computing.

As Gavin Uberti, co-founder and CEO of Etched, explains: "We're able to burn the transformer algorithm into the chip. We're building our own silicon and our own servers, and this enables us to get more than 20 times higher throughput in terms of output words per second for models like GPT or Llama."

The Power Problem (and Solution)

AI is getting more powerful, but it's also getting more power-hungry, which makes efficiency just as important as raw performance.

For context, a 2019 study estimated that training a single large AI model can emit as much carbon as five cars in their lifetimes.

This is where Sohu's pitch gets strongest, although the headline claim is about throughput per server rather than raw wattage. By Etched's figures, a single 8xSohu server can replace 160 H100 GPUs. If that holds up in real deployments, it's not just an incremental improvement – it's a paradigm shift in AI computing efficiency.
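The 160-GPU figure lines up with the throughput claims if you use the rounded 20x ratio: 20 H100 servers of 8 GPUs each. Here's a back-of-the-envelope check, assuming 8 GPUs per H100 server and taking the quoted throughput numbers at face value.

```python
import math

# Throughput claims quoted in this article (tokens/sec on Llama 70B).
SOHU_SERVER_TPS = 500_000  # one 8xSohu server
H100_SERVER_TPS = 23_000   # one H100 server
GPUS_PER_H100_SERVER = 8   # assumption: a standard 8-GPU H100 server


def h100_servers_needed(target_tps: float) -> int:
    """Whole H100 servers needed to reach a target throughput."""
    return math.ceil(target_tps / H100_SERVER_TPS)


ratio = SOHU_SERVER_TPS / H100_SERVER_TPS                        # about 21.7
rounded_gpus = int(round(ratio, -1)) * GPUS_PER_H100_SERVER      # 20 servers -> 160 GPUs
literal_gpus = h100_servers_needed(SOHU_SERVER_TPS) * GPUS_PER_H100_SERVER  # 22 servers -> 176 GPUs

print(f"ratio {ratio:.1f}x, rounded: {rounded_gpus} GPUs, literal: {literal_gpus} GPUs")
# ratio 21.7x, rounded: 160 GPUs, literal: 176 GPUs
```

Taken literally, the unrounded ratio would call for 22 servers, so "160" is best read as a round number rather than a precise equivalence.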

The Economics of AI

The power efficiency of Sohu isn't just good for the environment – it's good for the bottom line too.

Today, the largest AI models cost over $1 billion to train and are used for $10 billion or more in inference. At that scale, even a 1% improvement can justify a $50–100 million custom chip project. With Sohu claiming 10 to 20 times the throughput of GPU servers, the economic argument becomes compelling.
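The 1% figure is simple arithmetic: against $10 billion of inference spend, a 1% saving is $100 million, enough to cover the top of the quoted project range. Here's a minimal sketch of that break-even calculation. It assumes "improvement" means a fractional saving on inference spend and treats that spend as a single lump sum, ignoring timing, discounting, and deployment costs.

```python
def breakeven_improvement(chip_project_cost: float, inference_spend: float) -> float:
    """Fraction of inference spend that must be saved to pay for a chip project."""
    return chip_project_cost / inference_spend


INFERENCE_SPEND = 10e9  # "$10 billion or more in inference" (figure from the text)

for cost in (50e6, 100e6):
    pct = breakeven_improvement(cost, INFERENCE_SPEND) * 100
    print(f"${cost / 1e6:.0f}M project pays for itself at {pct:.1f}% savings")
# $50M project pays for itself at 0.5% savings
# $100M project pays for itself at 1.0% savings
```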

This mirrors the shift we saw in the cryptocurrency mining industry back in 2014, where it suddenly became more cost-effective to use specialized ASICs instead of general-purpose GPUs.

Could we be seeing the same trend play out in AI?

The Ecosystem Question

Of course, hardware is only half the battle. NVIDIA's real strength lies in its software ecosystem. CUDA, their parallel computing platform, is so ubiquitous that it's practically synonymous with AI development. Major frameworks like TensorFlow and PyTorch have deep CUDA integration, making NVIDIA GPUs the default choice for many researchers and companies.

Etched, recognizing this challenge, has developed its own software stack for Sohu. Unlike NVIDIA's proprietary CUDA, Etched's approach is to provide a complete, open-source software environment. This includes drivers, kernels, and a serving stack that's specifically optimized for transformer workloads.

The company's strategy here is twofold.

First, by open-sourcing their software, they're aiming to foster transparency and encourage adoption. Developers can dive into the code, understand how it works, and even contribute improvements.

Second, the specialized nature of their software—tailored specifically for transformer models—could potentially offer performance advantages over more general-purpose solutions.

However, Etched still faces an uphill battle. They're not just selling a chip; they're asking developers to adopt a whole new ecosystem. It's a classic chicken-and-egg problem: developers won't flock to Sohu without a robust ecosystem, but building that ecosystem requires developers.

The Bigger Picture

Step back, and you can see how Sohu fits into a larger trend in the tech world.

We've seen this story before: a new technology emerges, initially as a specialized tool, before gradually becoming a ubiquitous feature.

Take graphics processing, for example.

In the past, high-end visuals required specialized, expensive graphics cards. Companies like NVIDIA and ATI (now part of AMD) made their names selling these specialized tools.

But over time, basic graphics capabilities became integrated into standard computer processors. Today, most CPUs from Intel and AMD come with integrated graphics capable of handling everyday visual tasks, while dedicated GPUs still exist for high-performance needs like gaming or 3D rendering.

Etched is betting that AI acceleration will follow a similar path – from specialized hardware to a feature integrated into every chip. They're positioning Sohu as the high-performance solution for today's AI needs, particularly for transformer models.

But they're also anticipating a future where some level of AI acceleration is built into every chip, much like how basic graphics processing is now a standard feature in most CPUs.

The Real Question

The real question isn't whether Sohu will succeed or fail. It's whether the future of AI hardware lies in flexible, general-purpose chips or in specialized accelerators tailored to specific architectures.

Etched has placed its bet. Now we wait to see how the chips fall.